The 14th International Conference on Computer and IT Applications in the Maritime Industries (COMPIT) will be held from 11 to 13 May 2015 in Ulrichshusen/Germany. A preview by organizer Volker Bertram of Tutech Innovation GmbH

First held in 2000, COMPIT has established itself as a key conference in information technology (IT) for the maritime industries, bringing together software developers and users. Most participants come from industry, reflecting the practical relevance of the event. The main trends in IT for the maritime industries are e-navigation as a precursor to unmanned ships, the redefinition of supercomputing (super, mega, exa) and the arrival of Big Data.

E-navigation (or sea traffic management) can be seen as the maritime equivalent of air traffic control. Many tasks of nautical planning and monitoring shift to land-based control centres. E-navigation is a key step towards unmanned shipping. In parallel, unmanned surface vessels are used as platforms to develop and test algorithms for autonomous navigation. It seems only a matter of time before we will indeed see unmanned cargo ships.

Computing power continues to grow exponentially, which opens up new applications. Computational fluid dynamics (CFD) is one technology that is particularly greedy in terms of computational power. Frontier applications already use massive computer architectures with 200,000 cores in parallel.

The third trend, Big Data, uses simpler algorithms but huge amounts of data. Processing all this data is no longer the problem. Turning the data into useful information is the challenge. Applications appear in fleet operation and intelligent maintenance schemes.

COMPIT covers three full days. Traditionally each day is dedicated to a major phase in the lifecycle of ships:

Day 1 – ship design with sessions on: »hull models«, »simulation-based design«, »coupled simulations«, and »high-performance computing for CFD«

Day 2 – ship lifecycle management and production with sessions on: »product lifecycle management«, »IT for ship production & ship repair«, »virtual & augmented reality«, and »marine (swarm) robotics«

Day 3 – ship operation with sessions on: »ship routing«, »Big Data & performance insight«, »sea traffic management«, and »towards unmanned shipping«

Ships are 3D objects – right from the beginning

3D ship modelling has been advocated since the very first Compit in 2000. Quiet and continuous work marked the way, and now 3D modelling in ship design has been chosen as the opening session of Compit 2015. A bit boring, isn’t it? Not for the expert … nor for the accountant. 3D modelling is the backbone of all simulations and virtual reality applications, the glamorous sisters in the advanced IT application family. And 3D models save time and money, seriously. Cabos and Tietgen (DNV GL) demonstrate this convincingly in »3D Ship Design from the Start – An Industry Case Study«. The extra effort of creating a 3D model in the early design phase is more than balanced by the higher efficiency in subsequent tasks. The paper describes coupling NAPA Steel and POSEIDON for model re-use and its application in an actual newbuilding project at a major Korean shipyard.

Darren Larkins et al. (SSI) support the same message in »Utilizing a Single 3D Product Model through the Design Process«. Their paper shows the advantages gained by various companies that have utilized this approach via the Marine Information Model in SSI’s ShipConstructor software. Lindner et al. (Rostock University, TKMS and FSG) describe in »A Modular System Architecture for the Early Ship Design by combining a 3D-CAD-System with a Product Data Management System« how 3D ship modelling is used by two German shipyards with very different product portfolios. So »3D modelling right from the start« is entering industry practice in Europe, in America and in the Far East.

Herbert Koelman (SARC) gives an example of how cooperation between software packages and software companies is implemented in the Netherlands: »A Virtual Single Ship-Design System Composed of Multiple Independent Components« describes a pilot case where a general CAD program (Eagle, as used by Conoship), a specific ship design program (PIAS by SARC) and a CAE system (NUPAS by NCG) are linked into the loop. The conclusion is that coupling dedicated software packages is a better strategy than trying to develop monolithic »one code fits them all« ship design software. Or in short: cooperation beats integration.

Plug-and-play – loosely coupled interdisciplinary simulations

Assorted engineering simulations have always played a key role at Compit. Less mature simulation technologies focus on demonstrations of what has become possible. Very mature technologies, such as finite-element analyses (FEA), focus on rapid, cost-effective model generation. The trend is towards multi-disciplinary applications. As with CAD applications, the preferred approach is via loosely coupled applications. A »plug-and-play« culture is developing where software codes work together like individual companies within a larger holding. User-friendly design shells such as CAESES (ex FRIENDSHIP Framework) and exponentially growing computer power (largely through parallel computing on many processors at the same time) have encouraged this development.

Super, mega, peta, exa – redefine supercomputing

Computing power is growing at a staggering rate. Currently, the most powerful computer, Tianhe-2, capable of 33 Peta-FLOPS, consists of some 3 million cores. (Your PC is likely to have two or four cores. DNV GL’s parallel cluster, the biggest in the maritime world, has 7,600 cores.) The first computer capable of 10^18 floating-point operations per second (1 Exa-FLOPS) is expected to arrive around 2020. This so-called exascale machine is likely to have 300 times more cores than the Tianhe-2. In short, the architecture of future supercomputers will be much more parallel than in the past, and number-crunching software must adapt accordingly. Computational Fluid Dynamics (CFD) is spearheading this development.
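To put these figures side by side, the short Python sketch below works through the arithmetic using only the numbers quoted above. The constant and function names are illustrative assumptions, and the results are rough orders of magnitude rather than benchmark values.

```python
# Figures quoted in the text; all names here are illustrative.
TIANHE2_FLOPS = 33e15        # 33 Peta-FLOPS
TIANHE2_CORES = 3_000_000    # "some 3 million cores"
MARITIME_CLUSTER_CORES = 7_600
EXASCALE_FLOPS = 1e18        # 1 Exa-FLOPS


def performance_gap(target_flops: float, current_flops: float) -> float:
    """Factor by which performance must grow to reach the target."""
    return target_flops / current_flops


def flops_per_core(total_flops: float, cores: int) -> float:
    """Average floating-point throughput per core."""
    return total_flops / cores


gap = performance_gap(EXASCALE_FLOPS, TIANHE2_FLOPS)
per_core = flops_per_core(TIANHE2_FLOPS, TIANHE2_CORES)
core_ratio = TIANHE2_CORES / MARITIME_CLUSTER_CORES

print(f"Exascale is about {gap:.0f} times the quoted Tianhe-2 performance")
print(f"Tianhe-2 averages about {per_core / 1e9:.0f} Giga-FLOPS per core")
print(f"Tianhe-2 has about {core_ratio:.0f} times the cores of the 7,600-core cluster")
```

The per-core figure is a simple average over the quoted totals; it says nothing about how well a given CFD code actually scales across that many cores.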

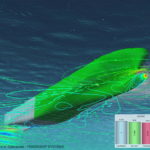

Milovan Peric (CD-adapco) gives a perspective on the development in »From Single-Processing to Massively Parallel CFD«, extrapolating from two decades of personal experience with pushing the frontiers in CFD, not only in the maritime industries. Tatsuo Nishikawa (Ship Research Center of Japan) gives us a glimpse of the future with »Application of Fully resolved Large Eddy Simulation to Self-Propulsion Test Condition of Double-Model KVLCC2«.

The K-computer is the largest computer in Japan and currently the fifth largest in the world. This powerful machine allows the simulation of numerical ship propulsion tests, resolving even the characteristic turbulence structures in time and space. The simulations use 60 billion cells and 200,000 cores in parallel. For perspective, this is 10,000 (!) times the number of cells and cores we typically employ at present in industry applications. A decade may easily pass before such computing power becomes widely available in our industry. In the meantime, we can start using »the cloud« and renting computing power and the massively parallel software licenses required for such computations.
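A quick back-of-the-envelope check makes the scale concrete. The Python sketch below uses only the figures quoted above (60 billion cells, 200,000 cores, a factor of 10,000); the names are illustrative, and the "typical industry" values are simply what that factor implies, not measured data.

```python
# Figures quoted in the text; all names here are illustrative.
FRONTIER_CELLS = 60_000_000_000   # 60 billion cells
FRONTIER_CORES = 200_000
SCALE_FACTOR = 10_000             # frontier vs. typical industry practice


def cells_per_core(cells: int, cores: int) -> int:
    """Average number of grid cells handled by each core."""
    return cells // cores


# Implied size of a typical industry simulation under the quoted factor.
industry_cells = FRONTIER_CELLS // SCALE_FACTOR
industry_cores = FRONTIER_CORES // SCALE_FACTOR

print(f"Frontier load: {cells_per_core(FRONTIER_CELLS, FRONTIER_CORES):,} cells per core")
print(f"Implied industry case: {industry_cells:,} cells on {industry_cores} cores")
print(f"Industry load: {cells_per_core(industry_cells, industry_cores):,} cells per core")
```

Because cells and cores are scaled by the same factor, the load per core comes out the same in both cases: the frontier run is a much larger problem spread over proportionally more cores.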

Hildebrandt et al. (Numeca and CPU 24/7) present how this might work in »Business and Technical Adaptivity in Marine CFD Simulations«. The technical adaptivity refers to adaptive grids, which give resolution on demand. This evolving technique leads to more efficient use of existing computer capacity. But the real story lies in the »pay on demand« business model which could become a fundamental game-changer giving access to advanced CFD also for small and medium enterprises.

And for dessert, Caponnetto et al. present »The Role of CFD in the Design of America’s Cup Yachts«. For the America’s Cup, the Formula-1 equivalent in naval architecture, the classical towing tank test has been replaced completely by the Numerical Towing Tank – not least thanks to the availability of high-performance computing. In fact, the classical towing tank has been replaced by hundreds or thousands of numerical towing tanks that test design variants in parallel.

Big Data – the new superstar on the horizon

»Big Data is like teenage sex: everyone talks about it, nobody really knows how to do it,« [Dan Ariely, professor at Duke University]. Well, at least now we talk about it and as time goes by we may get the hang of it. More data has been created in the last four years than in all the time before. Much of this data is created by machines: AIS transponders, machinery sensors, embedded chips, … »Big Data« is high-volume, high-speed and high-variety data that is difficult to process using traditional tools. Big Data may help us with business intelligence, predictive maintenance, streamlining traffic flows and other performance enhancements. Whether we like it or not, Big Data is entering the maritime industry and it is time that we understood it better.

Dausendschön’s »Big Data – Business Insight Building on AIS Data« gives some concrete examples of how Big Data analyses can help ports and ship operators to improve operational performance and gain business insight on competitors. Knutsen et al. focus on data structures for condition monitoring and predictive maintenance in »Implementing a Hadoop Infrastructure for Next-Generation Collection of Ship Operational Data«. (Apache Hadoop is a popular software platform for Big Data applications.) Koch et al. (Atlantec Enterprise Solutions & Lloyd’s Register) also cover Big Data in maintenance decisions for machinery and equipment in »Improving Machinery & Equipment Life Cycle Management Processes«. Collectively, this session will present an introduction to the terminology and potential solutions for Big Data in the maritime industries.

The enigmatic beauty of e-navigation – MONALISA

The EU project MONALISA 2.0 focusses on e-navigation, now more commonly called Sea Traffic Management (STM). In essence, this is the maritime equivalent of air traffic management, where land-based control centres have extensive insight into ship data and rights in routing ships. This is intended to support safer shipping, but also better logistics and fuel efficiency. Siwe et al. (Swedish Maritime Administration, Viktoria ICT) give an overview of the vision and achieved milestones of the project in »Sea Traffic Management – Concept and Components«. Sea System Wide Information Management (SeaSWIM) is the key infrastructure and will provide a basis for information exchange. SeaSWIM has four functional sub-concepts: Strategic Voyage Management, Dynamic Voyage Management, Flow Management and Port Cooperative Decision Making. The paper describes how each part of the concept will be driven by authorities, by international organisations and by service providers. Subsequent papers expand on individual aspects of the project, including legal, IT and psychological hurdles. We may look forward to the reaction of various stakeholders to these ideas. And then comes the unmanned ship …

Sense and nonsense of unmanned shipping

We already have totally automated trains with no conductors, and there are advanced studies to have airplanes and cars with no one flying or driving them. Could this be possible for ships? What could be the advantages and disadvantages? Giampiero Soncini (SpecTec) discusses »Sense and Nonsense of No-crew, Low-crew Ships«, looking at what is possible, what is feasible and what is only a dream (for now). Besides legal and political hurdles, there are still many technical issues to be solved before we may see unmanned cargo shipping; however, the required technology for assorted sub-tasks is evolving. Whether you support the »sense« faction or the »nonsense« faction, you will have to admit that the topic is interesting and that the way to consensus and the future is through informed discussion. Compit is the perfect forum for this.

Volker Bertram